1. Introduction to OmpSs

OmpSs is a programming model composed of a set of directives and library routines that can be used in conjunction with a high-level programming language to develop concurrent applications. This programming model is an effort to integrate features from the StarSs programming model family, developed by the Programming Models group of the Computer Sciences department at Barcelona Supercomputing Center (BSC), into a single programming model.

OmpSs is based on tasks and dependences. Tasks are the elementary unit of work; each task represents a specific instance of executable code. Dependences let the user annotate the data flow of the program, so that at run time this information can be used to determine whether executing two tasks in parallel may cause data races.
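As a minimal sketch of these two ideas, the C function below creates three tasks whose execution order follows only from their data flow. It assumes the OmpSs `in` and `out` dependence clauses and the `taskwait` directive; the function name `dependent_tasks` is hypothetical. Because the annotations do not change the program's semantics, a C compiler without OmpSs support ignores the pragmas and runs the tasks sequentially, producing the same result.

```c
/* Hypothetical example: three tasks ordered only by their data flow.
   Computes x = init; y = 2 * x; z = x + y. */
int dependent_tasks(int init)
{
    int x = 0, y = 0, z = 0;

    /* Task A writes x, so it is a predecessor of tasks B and C. */
    #pragma omp task shared(x) out(x)
    x = init;

    /* Task B reads x and writes y: the runtime runs it after A. */
    #pragma omp task shared(x, y) in(x) out(y)
    y = x * 2;

    /* Task C reads x and y: it runs after both A and B. */
    #pragma omp task shared(x, y, z) in(x, y) out(z)
    z = x + y;

    /* Wait for all child tasks before reading z. */
    #pragma omp taskwait
    return z;
}
```

The dependences are what rule out the data race: without `in(x)` on task B, the runtime would be free to run B before A has written `x`.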

The goal of OmpSs is to provide a productive environment for developing applications for modern High-Performance Computing (HPC) systems. Two concepts combine to make OmpSs a productive programming model: performance and ease of use. Programs developed in OmpSs must be able to deliver reasonable performance when compared to other programming models that target the same architectures. Ease of use is difficult to quantify, but OmpSs has been designed using principles that have been praised for their effectiveness in that area.

In particular, one of our most ambitious objectives is to extend the OpenMP programming model with new directives, clauses and API services or general features to better support asynchronous data-flow parallelism and heterogeneity (as in GPU-like devices).

This document, except where noted, uses the terminology defined in the OpenMP Application Program Interface version 3.0 [OPENMP30].

1.1. Reference implementation

The reference implementation of OmpSs is based on the Mercurium source-to-source compiler and the Nanos++ Runtime Library:

- The Mercurium source-to-source compiler provides the necessary support for transforming the high-level directives into a parallelized version of the application.

- The Nanos++ runtime library provides the services to manage all the parallelism in the user application, including task creation, synchronization and data movement, and provides support for resource heterogeneity.

1.2. A bit of history

The name OmpSs comes from the names of two other programming models, OpenMP and StarSs. The design principles of these two programming models form the basic ideas used to conceive OmpSs.

OmpSs takes from OpenMP its philosophy of starting from a sequential program and producing a parallel version of it by introducing annotations in the source code. These annotations do not have an explicit effect on the semantics of the program; instead, they allow the compiler to produce a parallel version of it. This characteristic allows users to parallelize applications incrementally: starting from the sequential version, new directives can be added to specify the parallelism of different parts of the application. This philosophy has an important impact on the productivity that can be achieved. Generally, when using more explicit programming models, applications need to be redesigned in order to implement a parallel version, and the user is responsible for how the parallelism is implemented. A direct consequence is that the maintenance effort of the source code increases when using an explicit programming model: tasks like debugging or testing become more complex.
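The incremental approach can be sketched as follows, assuming OmpSs inline tasks and the `taskwait` directive: an existing sequential block-sum routine is parallelized by adding two directives and nothing else. The names `block_sum` and `annotated_sum` are hypothetical. Deleting the two pragmas gives back the original sequential program, and a compiler without OmpSs support ignores them and computes the same total.

```c
#include <stddef.h>

/* Hypothetical sequential helper: sum of one block of the array. */
static long block_sum(const int *a, size_t len)
{
    long s = 0;
    for (size_t i = 0; i < len; i++)
        s += a[i];
    return s;
}

/* The original sequential routine plus two added directives. */
long annotated_sum(const int *a, size_t n, size_t bs)
{
    if (n == 0)
        return 0;
    if (bs == 0)
        bs = n;                       /* treat a zero block size as one block */

    size_t nblocks = (n + bs - 1) / bs;
    long partial[nblocks];            /* one partial sum per block (C99 VLA) */

    for (size_t b = 0; b < nblocks; b++) {
        size_t start = b * bs;
        size_t len = (n - start < bs) ? n - start : bs;

        /* Added directive 1: each block sum becomes a task. */
        #pragma omp task shared(partial) firstprivate(b, start, len)
        partial[b] = block_sum(a + start, len);
    }

    /* Added directive 2: wait for all block tasks before combining. */
    #pragma omp taskwait

    long total = 0;
    for (size_t b = 0; b < nblocks; b++)
        total += partial[b];
    return total;
}
```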

StarSs, or Star SuperScalar, is a family of programming models that also offer implicit parallelism through a set of compiler annotations. It differs from OpenMP in some important areas. StarSs uses a different execution model, a thread pool, whereas OpenMP implements fork-join parallelism. StarSs also includes features to target heterogeneous architectures, while OpenMP only targets shared-memory systems. Finally, StarSs offers asynchronous parallelism as the main mechanism for expressing parallelism, whereas OpenMP only started to support it in version 3.0.

StarSs raises the bar on how much implicitness is offered by the programming model. When programming with OpenMP, the developer first has to define which regions of the program will be executed in parallel, then has to express how the inner code is executed by the threads forming the parallel region, and finally may need to add directives to synchronize the different parts of the parallel execution. StarSs simplifies part of this process by providing an environment where parallelism is implicitly created from the beginning of the execution, so the developer can omit the declaration of parallel regions. Parallel code is defined using the concept of tasks, which are pieces of code that can be executed asynchronously in parallel. To synchronize the different parallel parts of a StarSs application, the programming model also offers a dependency mechanism that expresses the correct order in which individual tasks must be executed to guarantee a proper execution. This mechanism enables a much richer expression of parallelism than the one achieved by OpenMP, which lets StarSs applications exploit the parallel resources more efficiently.
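The difference in how much must be written explicitly can be sketched with a recursive Fibonacci function built from tasks (a hypothetical example, not taken from any OmpSs distribution). The task-creating function `fib` is identical in both cases; only the entry points differ. `fib_openmp` shows the `parallel` and `single` constructs that OpenMP needs before tasks can spread across a team of threads, while `fib_ompss` reflects the StarSs/OmpSs model, where the threads already exist when the program starts. Each entry point is meant for its respective compiler; a plain C compiler ignores all pragmas and both return the same value.

```c
/* Task-creating code shared by both models: two child tasks plus a wait. */
static int fib(int n)
{
    if (n < 2)
        return n;

    int a, b;
    #pragma omp task shared(a) firstprivate(n)
    a = fib(n - 1);

    #pragma omp task shared(b) firstprivate(n)
    b = fib(n - 2);

    #pragma omp taskwait
    return a + b;
}

/* OpenMP style: open a parallel region, then let a single thread
   create the root of the task tree while the team executes tasks. */
int fib_openmp(int n)
{
    int r = 0;
    #pragma omp parallel shared(r)
    #pragma omp single
    r = fib(n);
    return r;
}

/* OmpSs style: parallelism exists from the start of the execution,
   so tasks are created directly, with no parallel region. */
int fib_ompss(int n)
{
    return fib(n);
}
```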

OmpSs aims to be the evolution that OpenMP needs in order to target newer architectures. For this, OmpSs takes key features from OpenMP and combines them with new ideas developed in the StarSs family of programming models.

1.3. Influence in OpenMP

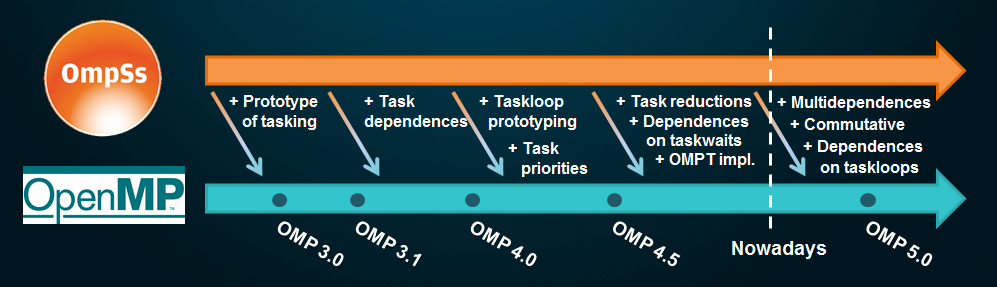

Many OmpSs and StarSs ideas have been introduced into the OpenMP programming model. The next figure summarizes our contributions:

Starting with version 3.0, released in May 2008, OpenMP included support for asynchronous tasks. The reference implementation, which was used to measure the benefits that tasks brought to the programming model, was developed at BSC and consisted of the Nanos4 runtime library and the Mercurium source-to-source compiler.

Our next contribution, included in OpenMP 4.0 (released in July 2013), was the extension of the tasking model to support data dependences, one of the strongest points of OmpSs, which allows fine-grained synchronization among tasks.
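The data-dependence extension can be illustrated with the OpenMP 4.0 `depend` clause. In this minimal sketch (the function name `depend_pipeline` is hypothetical), two independent producer tasks write `a` and `b`, and a consumer task that reads both is ordered after them through its `depend(in: ...)` clause alone. The code assumes an OpenMP 4.0 compiler, for example GCC with `-fopenmp`; without it the pragmas are ignored and the tasks run in program order.

```c
/* Hypothetical producer/consumer chain ordered by OpenMP 4.0 depend clauses. */
int depend_pipeline(int seed)
{
    int a = 0, b = 0, c = 0;

    #pragma omp parallel shared(a, b, c)
    #pragma omp single
    {
        /* Two independent producers: they may run concurrently. */
        #pragma omp task shared(a) depend(out: a)
        a = seed + 1;

        #pragma omp task shared(b) depend(out: b)
        b = seed * 2;

        /* The consumer starts only after both producers have finished. */
        #pragma omp task shared(a, b, c) depend(in: a, b) depend(out: c)
        c = a + b;

        /* Wait for all three tasks before leaving the single region. */
        #pragma omp taskwait
    }
    return c;
}
```

Synchronization here is point-to-point: the consumer waits only for the tasks that produce its inputs, rather than for every previously created task, as a `taskwait` placed before it would require.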

In OpenMP 4.5, the latest version at the time of writing, the tasking model was extended with the `taskloop` construct. The reference implementation of this construct was developed at BSC. Apart from that, we also contributed to this version by adding the `priority` clause to the `task` and `taskloop` constructs.
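A minimal sketch of both OpenMP 4.5 features, assuming an OpenMP 4.5 compiler (the function name `scale_and_sum` is hypothetical): `taskloop` with `grainsize` splits a loop into tasks of roughly that many iterations, and `priority` gives two independent tasks a scheduling hint. Priorities are hints only; OpenMP clamps them to the value of `OMP_MAX_TASK_PRIORITY`, which defaults to 0, so with default settings both tasks are treated alike.

```c
/* Hypothetical example: scale v in place with a taskloop, then sum the
   two halves in two independent tasks with different priority hints. */
long scale_and_sum(int *v, int n, int factor)
{
    long lo = 0, hi = 0;
    int mid = n / 2;

    #pragma omp parallel shared(lo, hi)
    #pragma omp single
    {
        /* Each generated task handles about 64 iterations; taskloop waits
           for its tasks at the end unless nogroup is specified. */
        #pragma omp taskloop grainsize(64)
        for (int i = 0; i < n; i++)
            v[i] *= factor;

        /* Higher priority hint for the first half. */
        #pragma omp task shared(lo) priority(10)
        for (int i = 0; i < mid; i++)
            lo += v[i];

        /* Lower priority hint for the second half. */
        #pragma omp task shared(hi) priority(1)
        for (int i = mid; i < n; i++)
            hi += v[i];

        #pragma omp taskwait
    }
    return lo + hi;
}
```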

For upcoming OpenMP versions, we plan to propose more of the tasking ideas that we already have in OmpSs, such as task reductions or new extensions to the task dependence model.

1.4. Glossary of terms

- ancestor tasks

- The set of tasks formed by the parent task of a given task and all of the parent's ancestor tasks.

- base language

- The base language is the programming language in which the program is written.

- child task

- A task is a child of the task which encounters its task generating code.

- construct

- A construct is an executable directive and its associated statement. Unlike the OpenMP terminology, we will explicitly refer to the lexical scope of a construct or the dynamic extent of a construct when needed.

- data environment

- The data environment is formed by the set of variables associated with a given task.

- declarative directive

- A directive that annotates a declarative statement.

- dependence

- The relationship that exists between a predecessor task and one of its successor tasks.

- descendant tasks

- The descendant tasks of a given task are the set of all its child tasks and their descendant tasks.

- device

- A device is an abstract component, including hardware and/or software elements, capable of executing tasks. Devices may be accessed by means of the offload technique: a task generated on one device may execute on a different device. All OmpSs programs have at least one device (i.e. the host device) with one or more processors.

- directive

- In C/C++, a `#pragma` preprocessor entity. In Fortran, a comment that follows a given syntax.

- dynamic extent

- The dynamic extent is the interval between the establishment of the execution entity and its explicit disestablishment. The dynamic extent always obeys a stack-like discipline while running the code, and it includes any code in called routines as well as any implicit code introduced by the OmpSs implementation.

- executable directive

- A directive that annotates an executable statement.

- expression

- A combination of one or more data components and operators that the base language can interpret.

- function task

- In C, a task declared by a `task` directive at file scope that comes before a declaration that declares a single function, or before a function-definition. In both cases the declarator should include a parameter type list.

- In C++, a task declared by a `task` directive at namespace scope or class scope that comes before a function-definition, or before a declaration or member-declaration that declares a single function.

- In Fortran, a task declared by a `task` directive that comes before the `SUBROUTINE` statement of an external-subprogram, internal-subprogram or interface-body.

- host device

- The host device is the device in which the program begins its execution.

- inline task

- In C/C++, an explicit task created by a `task` directive on a statement inside a function-definition.

- In Fortran, an explicit task created by a `task` directive in the executable part of a program unit.

- lexical scope

- The lexical scope is the portion of code which is lexically (i.e. textually) contained within the establishing construct including any implicit code lexically introduced by the OmpSs implementation. The lexical scope does not include any code in called routines.

- outline tasks

- An outlined task is also known as a function task.

- predecessor task

- A task becomes a predecessor of one or more other tasks when there are dependences between this task and those tasks (i.e. its successor tasks). That is, there is a restriction on the order in which the runtime must execute them: all predecessor tasks must complete before a successor task can be executed.

- parent task

- The task that encountered a task generating code is the parent task of the newly created task(s).

- ready task pool

- The set of tasks ready to be executed (i.e. not blocked by any condition).

- sliceable task

- A task that may generate other tasks in order to compute the whole computational unit.

- slicer policy

- The slicer policy determines the way a sliceable task must be segmented.

- structured block

- An executable statement with a single entry point (at the top) and a single exit point (at the bottom).

- successor task

- A task becomes a successor of one or more other tasks when there are dependences between those tasks (i.e. its predecessor tasks) and itself. That is, there is a restriction on the order in which the runtime must execute them: all predecessor tasks must complete before a successor task can be executed.

- target device

- A device onto which tasks may be offloaded from the host device or other target devices. The ability of offloading tasks from a target device onto another target device is implementation defined.

- task

- A task is the minimum execution entity that can be managed independently by the runtime scheduler (although a single task may be executed in different phases according to its task switching points). Tasks in OmpSs can be created by any task generating code.

- task dependency graph

- The set of tasks and their relationships (successor/predecessor) with respect to the corresponding scheduling restrictions.

- task generating code

- The code whose execution creates a new task. In OmpSs this can occur when encountering a `task` construct or a `loop` construct, or when calling a routine annotated with a `task` declarative directive.

- thread

- A thread is an execution entity that may execute concurrently with other threads within the same process. These threads are managed by the OmpSs runtime system. In OmpSs a thread executes tasks.
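Several of these terms can be tied together in a short sketch, assuming the OmpSs syntax for function tasks (a `task` directive placed before a function definition) and array-shaping expressions such as `[n]src` in dependence clauses; the names `scale_block` and `glossary_demo` are hypothetical. Each call to `scale_block` is task generating code that creates a child task of the task running `glossary_demo`. The first call is a predecessor of the second because it writes `b`, which the second call reads. The final summation loop is an inline task. A compiler without OmpSs support ignores the pragmas and runs everything in program order.

```c
/* Function (outlined) task: every call creates a task. The shapes [n]src
   and [n]dst tell the runtime how many elements each dependence covers. */
#pragma omp task in([n]src) out([n]dst)
void scale_block(const int *src, int *dst, int n, int k)
{
    for (int i = 0; i < n; i++)
        dst[i] = src[i] * k;
}

int glossary_demo(void)
{
    int a[4] = {1, 2, 3, 4};
    int b[4], c[4];
    int total = 0;

    /* Two child tasks of this function's task. The first writes b,
       the second reads b: predecessor and successor. */
    scale_block(a, b, 4, 2);
    scale_block(b, c, 4, 3);

    /* Wait for both function tasks before using c. */
    #pragma omp taskwait

    /* Inline task: a task directive on a statement in a function body. */
    #pragma omp task shared(c, total)
    for (int i = 0; i < 4; i++)
        total += c[i];

    #pragma omp taskwait
    return total;
}
```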